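[Tech News] AI Godfather Yann LeCun: "I'm No Longer Interested in Large Language Models," Predicts the Next AI Revolution: "World Models"

"The golden age of language models is over!" Meta's Chief AI Scientist and Turing Award winner Yann LeCun makes a bold prediction: the real AI revolution, built on understanding the world and creating human-level "world models," is only just getting started. Today's large language models (LLMs) have reached their limits. To truly cross the threshold of intelligence, future AI must be able to reason, remember, and plan. The next global wave of AI innovation will be ignited by open collaboration, breakthrough hardware, and entirely new ways of thinking. This revolution has only just begun.

The Limits of Large Language Models: Future AI Needs Deeper Intelligence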

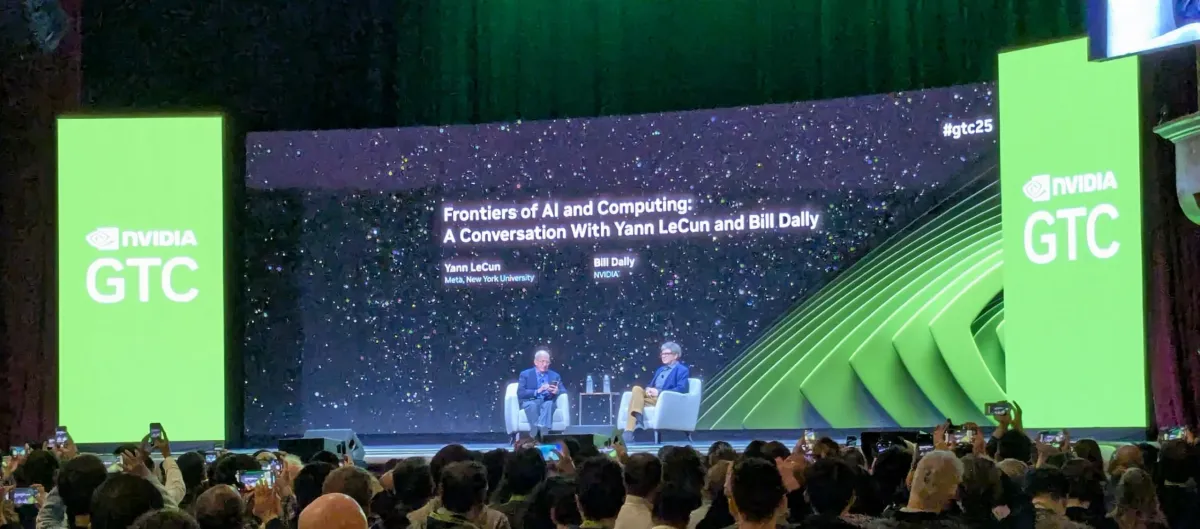

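At NVIDIA GTC 2025 (GPU Technology Conference), when asked about the most exciting AI development of the past year, Meta Chief AI Scientist and Turing Award winner Yann LeCun gave a surprising answer: he is no longer interested in large language models (LLMs).

The reason is simple: today's LLMs improve mainly through incremental gains from piling on more data, more compute, and more synthetic data, with no real conceptual breakthroughs.

LeCun noted that the way current LLMs reason is too simplistic to meet the needs of future intelligent systems.

What really excites him are four major challenges that AI must solve:

- Understanding the physical world: enabling machines to build internal world models

- Persistent memory: building machines that can remember over the long term

- Genuine reasoning: going beyond today's shallow, surface-level reasoning

- Autonomous planning: enabling AI to predict the future and devise plans of action

LeCun predicts that within the next five years these areas will become the central, hottest topics in AI research.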

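World Models: From Simulating Reality to AI That Truly Thinks

LeCun stresses that for AI to truly understand and reason, we must build "World Models."

Such models mimic the way humans and animals learn naturally: not through language, but by understanding the physical world through perceptual experience.

For example:

- We instinctively know that pushing a bottle at different points produces different results

- Infants as young as nine months can already tell when a floating object violates the rules of gravity

This kind of intuitive physical understanding is far more complex than reasoning through language alone.

LeCun states bluntly that the way current LLMs represent the world discretely, as tokens, will not work, because:

- The real world is continuous and high-dimensional; it cannot be captured by a vocabulary of a hundred thousand or so tokens

- Trying to predict visual signals pixel by pixel burns enormous amounts of compute and cannot escape inherently unpredictable noise

The solution is the Joint Embedding Predictive Architecture (JEPA), whose core features are:

- It ignores irrelevant details and predicts future changes only in an abstract feature space

- It is better suited to reasoning and planning, and cheaper to train

Successful examples such as DINO, DINOv2, and I-JEPA have shown that this approach learns deeper and more useful representations.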

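The Arrival of AMI: An Open-Source Intelligence Revolution Beyond AGI

On AGI (artificial general intelligence), LeCun pointed out:

Human intelligence is itself specialized; it is not truly "general." He therefore prefers the term "AMI" (Advanced Machine Intelligence) instead.

He outlined a path for AI's future development:

- Within 3 to 5 years: small AMI systems capable of reasoning and planning

- In about ten years: AMI approaching human-level intelligence

But LeCun stressed:

Simply scaling up LLMs will not reach this goal; the key is to design entirely new AI architectures that can understand the world.

The driving force behind this revolution must come from global open-source collaboration:

- ResNet, for instance, was created at Microsoft Research Asia in Beijing

- After Llama was open-sourced, it passed 1 billion downloads worldwide, sparking countless innovations

LeCun firmly believes that closed platforms will gradually disappear, and that only openness and sharing can nurture a truly diverse, cross-cultural AI ecosystem that serves the whole world.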

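The Hardware Revolution and New Challenges for AI Reasoning: The Rise of System 2

LeCun noted that the core of future AMI systems will no longer be fast, reactive responses (System 1) like those of today's LLMs, but System 2 intelligence capable of deep reasoning and planning.

However, System 2 reasoning will consume more energy than today's inference, posing entirely new challenges for hardware.

His observations on future hardware trends include:

- Neuromorphic chips: well suited to low-power edge computing, where they can greatly reduce the cost of moving data

- Optical computing: has so far fallen short of expectations

- Quantum computing: LeCun is highly skeptical of its practical usefulness for AI

In short, AI will continue to rely on highly optimized digital CMOS technology as its computing foundation in the near term.

LeCun concluded by emphasizing:

Whether for understanding the world, reasoning and planning, or bringing future AMI into being, open source is the only way forward.

In the future, humans will not be replaced by AI; instead, they will become the commanders of super-intelligent assistants.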

Yann LeCun Says He's No Longer Interested in LLMs: From World Models to Human-Level Intelligence, the Next AI Frontier

The Limits of LLMs: Why Future AI Needs Deeper Intelligence

At GTC 2025, when asked about the most exciting AI breakthroughs over the past year, Meta's Chief AI Scientist Yann LeCun surprised the audience: he is no longer interested in Large Language Models (LLMs).

The reason is simple. Today's LLMs achieve mostly incremental gains—scaling up data, compute, and synthetic datasets—but lack true conceptual innovation.

Moreover, LeCun criticizes current LLMs for relying on overly simplistic reasoning, which is insufficient for building future intelligent systems.

Instead, he highlights four critical challenges that AI must tackle:

- Understanding the Physical World: Building internal world models

- Persistent Memory: Enabling long-term memory in machines

- True Reasoning: Moving beyond shallow inference

- Autonomous Planning: Equipping AI to predict and plan actions

LeCun predicts that within five years, these fields will become the hottest topics in AI research.

World Models: From Simulating Reality to Building Thinking Machines

To achieve genuine understanding and reasoning, LeCun argues that AI needs to develop World Models—internal cognitive simulations of how the world works, learned through perception rather than language.

For example:

- Intuitively knowing that pushing a bottle at different points yields different outcomes

- Infants as young as nine months detecting when physical laws like gravity are violated

This intuitive grasp of the physical world is vastly more complex than language prediction.

LeCun explains why current token-based LLMs cannot build true world understanding:

- The real world is continuous and high-dimensional, unlike the discrete tokens (~100k vocabulary) used by LLMs.

- Predicting raw video pixels fails because it wastes resources on inherently unpredictable noise (e.g., exact leaf movements, random crowd faces).
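To make the noise argument concrete, here is a toy sketch in Python. It is not LeCun's code or a real video model: the 1-D "frame," its single bright "object," the uniform background flicker standing in for leaf movements, and the hand-written `encode` feature are all hypothetical. Even a predictor that knows the true dynamics perfectly cannot push its pixel-level error below the variance of the noise, whereas predicting only an abstract feature (the object's position) can be exact.

```python
import random

WIDTH = 64           # hypothetical frame width in pixels
OBJECT_VALUE = 10.0  # brightness of the single predictable object

def make_frame(obj_pos, rng):
    """Toy 1-D frame: unpredictable flicker in [-1, 1] (stand-in for leaves)
    plus one bright object whose position is fully predictable."""
    frame = [rng.uniform(-1.0, 1.0) for _ in range(WIDTH)]
    frame[obj_pos] = OBJECT_VALUE
    return frame

def encode(frame):
    """Hypothetical hand-written abstract feature: the object's position,
    i.e. the index of the brightest pixel. Real JEPAs learn their encoders."""
    return max(range(len(frame)), key=lambda i: frame[i])

def pixel_mse(pred, target):
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)

def compare(trials=200, seed=0):
    rng = random.Random(seed)
    pixel_losses, feature_losses = [], []
    for _ in range(trials):
        pos = rng.randrange(WIDTH - 1)
        target = make_frame(pos + 1, rng)  # assumed dynamics: object moves one pixel right

        # Best possible pixel predictor: the expected next frame
        # (noise averages to 0, object at its known new position).
        expected = [0.0] * WIDTH
        expected[pos + 1] = OBJECT_VALUE
        pixel_losses.append(pixel_mse(expected, target))

        # Feature-space predictor: only predict where the object will be.
        feature_losses.append(float(pos + 1 - encode(target)) ** 2)
    return sum(pixel_losses) / trials, sum(feature_losses) / trials

pixel_loss, feature_loss = compare()
print(f"pixel-space MSE:   {pixel_loss:.3f}")    # stays near the noise variance (about 1/3)
print(f"feature-space MSE: {feature_loss:.3f}")  # 0.000
```

No improvement to the pixel predictor can lower the first number, because the remaining error is the noise itself; that is the wasted effort the bullet above describes.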

The solution?

LeCun advocates for Joint Embedding Predictive Architectures (JEPA), which:

- Learn abstract representations of sensory input (images, video)

- Predict future changes within this abstract feature space, ignoring irrelevant details

- Are more efficient and better suited for reasoning and planning

Successful examples like DINO, DINOv2, and I-JEPA already validate this approach, showing deeper representations with lower training costs.
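The key idea of a JEPA-style objective is that prediction error is measured between embeddings, not raw inputs. The sketch below shows that structure in plain Python under heavy simplifying assumptions: the encoder is a hypothetical frozen function that keeps two informative coordinates and drops six noise channels, the latent dynamics matrix `TRUE_A` is made up, and only a linear predictor is trained with SGD. Real JEPAs such as I-JEPA also learn their encoders and use an exponential-moving-average target encoder to avoid representational collapse; none of that is modeled here.

```python
import random

NOISE_DIMS = 6
TRUE_A = [[0.9, -0.2],
          [0.3, 1.0]]  # hypothetical latent dynamics: z_next = TRUE_A @ z

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def encode(obs):
    """Hypothetical frozen encoder: keep the 2 informative coordinates and
    discard the noise channels. In a real JEPA this is a learned network."""
    return obs[:2]

def sample_pair(rng):
    """Context observation x and target observation y one time step later."""
    z = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
    z_next = matvec(TRUE_A, z)
    x = z + [rng.uniform(-1, 1) for _ in range(NOISE_DIMS)]
    y = z_next + [rng.uniform(-1, 1) for _ in range(NOISE_DIMS)]
    return x, y

def embedding_loss(W, x, y):
    """||predictor(encode(x)) - encode(y)||^2 / d; the target embedding is a constant."""
    s_x, s_y = encode(x), encode(y)
    pred = matvec(W, s_x)
    loss = sum((p - t) ** 2 for p, t in zip(pred, s_y)) / len(s_y)
    return loss, s_x, pred, s_y

def train(steps=2000, lr=0.1, seed=0):
    rng = random.Random(seed)
    W = [[0.0, 0.0], [0.0, 0.0]]  # linear predictor, trained by plain SGD
    for _ in range(steps):
        x, y = sample_pair(rng)
        _, s_x, pred, s_y = embedding_loss(W, x, y)
        d = len(s_y)
        for i in range(d):
            g = 2.0 / d * (pred[i] - s_y[i])  # dL/dpred_i
            for j in range(len(s_x)):
                W[i][j] -= lr * g * s_x[j]    # dL/dW_ij = g * s_x_j
    return W

W = train()
print([[round(w, 3) for w in row] for row in W])  # approaches TRUE_A
```

The six noise channels in `x` and `y` never enter the loss, so the predictor spends no capacity on them; that is the "ignoring irrelevant details" property in miniature.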

The Rise of AMI: Open Innovation Beyond AGI

Regarding Artificial General Intelligence (AGI), LeCun suggests a different framing:

Human intelligence is highly specialized, not truly "general." Thus, he proposes focusing on Advanced Machine Intelligence (AMI) instead.

His roadmap:

- Small-scale AMI systems capable of reasoning and planning: within 3–5 years

- Human-level AMI: perhaps within a decade, through gradual progress

Crucially, LeCun stresses that scaling up LLMs alone will not get us there.

New architectures—rooted in world understanding—are essential.

Moreover, the next AI revolution must come from global open collaboration, not isolated tech giants:

- ResNet, one of the most-cited AI papers, came from Microsoft Research Asia in Beijing.

- Meta's open-sourcing of Llama led to over 1 billion downloads, sparking massive innovation worldwide.

LeCun firmly believes that closed, proprietary platforms will eventually disappear, replaced by open, community-driven ecosystems that foster diversity and progress.

Hardware Frontiers and the Rise of System 2 AI

LeCun points out that future AMI systems must evolve beyond today's fast, intuitive System 1 thinking (reflexive and reactive) toward deliberate, thoughtful System 2 reasoning.

However, System 2 reasoning—enabling planning and complex cognition—will be far more computationally expensive, demanding new hardware capabilities.
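A rough way to see why System 2 costs more: a reflexive System 1 policy spends one evaluation per action, while a planner must imagine many candidate futures with a world model before acting. The sketch below is a hypothetical toy, not LeCun's proposal. The 1-D world, the action set `ACTIONS`, and the perfect `world_model` are assumptions, and exhaustive search is the crudest possible planner; smarter optimizers reduce the count, but planning still means many model evaluations per decision.

```python
from itertools import product

ACTIONS = (-1, 0, 1)  # hypothetical actions: step left, stay, step right

def world_model(state, action):
    """Hypothetical world model; here it is just the exact 1-D dynamics."""
    return state + action

def system1_act(state, goal):
    """System 1: one reflexive mapping from state to action, no lookahead."""
    return (goal > state) - (goal < state)

def system2_plan(state, goal, horizon):
    """System 2: roll out every action sequence in the world model and keep
    the one ending closest to the goal. Returns (plan, final_cost, model_calls)."""
    best_plan, best_cost, calls = None, float("inf"), 0
    for plan in product(ACTIONS, repeat=horizon):
        s = state
        for a in plan:
            s = world_model(s, a)
            calls += 1
        cost = abs(goal - s)
        if cost < best_cost:
            best_plan, best_cost = plan, cost
    return best_plan, best_cost, calls

for h in range(1, 6):
    _, _, calls = system2_plan(0, 2, h)
    print(f"horizon {h}: {calls} world-model calls")
```

Here the planner makes horizon × 3^horizon model calls, so for horizons 1 through 5 the count grows 3, 18, 81, 324, 1215, while `system1_act` answers with a single evaluation.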

LeCun's views on hardware:

- Neuromorphic/Analog Chips: Promising for edge devices, because they minimize the energy spent on data movement

- Optical Computing: Disappointing after decades of research

- Quantum Computing: Highly skeptical of its relevance to AI (except for quantum simulation)

In the short term, AI will continue relying on highly optimized digital CMOS technologies.

LeCun concludes with a clear message:

"Openness is the only way forward—for building world models, achieving true reasoning, and advancing AMI."

In the future, humans won't be replaced by AI.

Instead, we will manage armies of highly capable intelligent assistants, shaping a new era of human-machine collaboration.

Image by Blognone

News source: NVIDIA Developer